October 27, 2010

What Artists Should Know About SoundOut

You know your song is great, but is it a hit? Will it inspire listeners to share it with their friends, hand over their email address, or maybe even open their wallets? You need feedback from average music fans who have nothing to lose by being honest.

SoundOut compares your song to 50,000 others from both major labels and indies. They promise to tell you how good your track is with guaranteed 95% accuracy (I’m still trying to wrap my brain around what that means). Starting at $40, they compile the results of 80 reviews into an easy-to-read PDF report. Top rated artists are considered for additional publishing and promotional opportunities.

The head of business development invited me to try out the service for free with three 24-hour “Express Reports” (a $150 value). I used the feedback from my Jango focus group to select the best and worst tracks I recorded for my last album, along with my personal favorite, an 8-minute progressive house epic. You can download all three of my PDF reports here.

Summary of Results

I can describe the results in one word: brutal. None of the songs are deemed worthy of being album tracks, much less singles. In the most important metric, Market Potential, my best song received a 54%, my worst 39%, and my favorite a pathetic 20%. Those numbers stand in stark contrast to my stats at Jango, for reasons I’ll explain in a bit.

Despite the huge swing in percentages, the track ratings only vary from 4.7 to 5.9, which implies Market Potential scores of 47% to 59%. For better or for worse, those scores are weighted using “computational forensic linguistic technology and other proprietary SoundOut techniques.” Even the track rating score is weighted! I would love to see a raw average of the 80 reviewers’ 0-10 point ratings, because I don’t trust the algorithms. The verbal smokescreen used to describe them doesn’t exactly inspire confidence (isn’t any numerical analysis “computational”?).

Perhaps to soften the blow, the bottom of the page lists three songs by well known artists in the same genre that have similar market potential. Translation: your songs suck, but so do these others by major label acts you look up to. Curiously, two of the same songs are listed on my 39% and 20% reports, which casts further doubt on the underlying algorithms.

Detailed Feedback

I found the Detailed Feedback page to be the most useful. It tells you who liked your song based on age group and gender. I don’t know exactly what “like” translates to on a 10-point scale, but it makes sense that 25-34 year-olds rate my retro 80’s song higher than 16-24 year-olds, since the former were actually around back then.

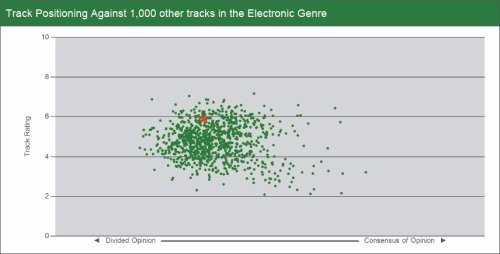

The track positioning chart maps your song relative to 1,000 others in the genre, based on rating and consensus of opinion. It’s a clean and intuitive representation of how your song stacks up to the competition. Still, it would be nice to know what criteria (if any) was used to select those 1,000 tracks.

Review Analysis

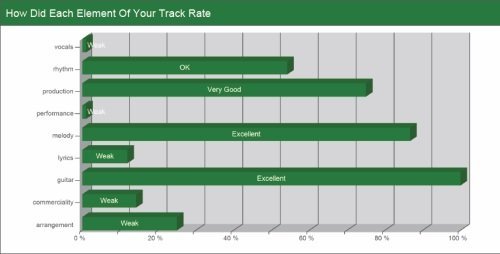

The Review Analysis section is utterly useless. The elements listed change from song to song. The only element that was consistently judged excellent is guitar, which is quite generous considering there’s no guitar in any of my songs.

The actual reviews are no better or worse than the comments on my Jango profile. They ranged from overly enthusiastic (“THIS SONG WAS GREAT I REALLY LIKED IT IT HAD A GOOD BEAT TO IT I MY HAVE TO DOWNLOAD IT MYSLEF”) to passive aggressive (“this song wasn’t as bad as it could be”). At the very least, the reviews prove there are real people behind the numbers.

Unfortunately for me, they don’t appear to be fans of electronic music. Not a single reviewer mentioned an electronic act. Instead of the usual comparisons to The Postal Service, Owl City, and Depeche Mode, I got Michael Jackson, Whitney Houston(!), and Alan Parsons Project.

Scouting for Fame and Fortune

As puzzling as the mention of guitar in the review analysis was, it was a comment about my “20% song” that convinced me to review the review process. It said “the lack of vocals is a shame.” Those seven words reveal a key flaw in their methodology: reviewers only have to listen to the first 60 seconds of your song.

If you’re considering giving SoundOut a whirl, I highly recommend trying your hand as a scout on their sister site, Slicethepie. In just five minutes, you too can be one of the “real music fans and consumers” reviewing songs for SoundOut. You’ll start well below the minimum wage at $0.02 per review, but top performers can level up to $0.20 a pop.

Hitting the play button starts the 60 second countdown until you can start typing your review. If you don’t come up with at least a couple quality sentences, it nags you to try harder. The elements in each track are not explicitly rated. Instead, the text of each review is analyzed, as evidenced by the scolding I received when one of my reviews was rejected:

“A review of the track would be good! You haven’t mentioned any of our expected musical terms - please try again…”

I didn’t appreciate the sarcasm after composing what I considered to be a very insightful review mentioning the production and drums - both of which are scored elements. This buggy behavior may explain my stellar air guitar scores. Perhaps my reviewers wrote “it would be NICE to hear some GUITAR” and the algorithm mistakenly connected the two words.

Even though I only selected electronic genres when I created my profile, I heard everything from mainstream rock/pop to hip hop, country, and metal. Reviewers are not matched to songs by genre. Everyone reviews everything, which opens us all up to Whitney Houston comparisons.

Conclusion

Can you tell if a song is great by listening to the first minute? No, but you can tell if it’s a hit.

If you operate in a niche genre, searching for your 1000 true fans, SoundOut may not be a good fit. For example, my best song doesn’t pay off until you hear the lyrical twist in the last chorus, and my “20% song” doesn’t have vocals for the first two minutes. With that in mind, how useful is a comprehensive analysis of the first 60 seconds? Less useful still when the data comes from reviewers who aren’t fluent in the genre.

While I have some reservations about their methodology, SoundOut is the fastest way I know of to get an unbiased opinion from a large sample of listeners. Use it wisely!

UPDATE: SoundOut posted a detailed response in the comments here.

Brian Hazard is a recording artist with sixteen years of experience promoting his eight Color Theory albums. His Passive Promotion blog emphasizes “set it and forget it” methods of music promotion. Brian is also the head mastering engineer and owner of Resonance Mastering in Huntington Beach, California.

Reader Comments (13)

I had a similar experience... and if the individual reviews had contained some spelling and grammar I might have paid a bit more attention to them. One clearly had trouble listening to one of my singer/songwriter songs as it was described as 'weird noise with no vocals or guitars or anything' but didn't think that maybe it wasn't streaming correctly.

I totally agree with this review. And his conclusion is dead-on: soundout rewards hit potential, nothing more, but that's cool because that's exactly what they say they do.

What's not so cool is that their reviewers only have to listen to the first ten seconds of a song. I mean, for $40 per report, their process should be more intensive.

I also agree with Brian about the review analysis. I too had a song that got rated poorly for guitars when it had no guitars in it. Soundout shouldn't do this, it should give its reviewers the option to select NA.

Last, soundout should explain its mighty algorithm more. Where did it come from, who developed it, why should I believe it?

For a cheaper choice, I'd recommend Broadjam, but it's got problems, too! Sigh...

Jeff

Brian, this is a great article! Love the feedback on the non-existing guitar ;)

There is another service that promises to tell you if you composed and produced a hit:

http://uplaya.com/

I have not tried it yet - just made a mental note when I read about it a few weeks ago. Have you looked into it? Like yourself I am producing electronic music and always wonder how anybody would be able to rate the hit potential of my song if they are consuming music outside our specific scene? Take any Deadmau5 track - any average music consumer wouldn't give it any potential I believe and yet the electronic music scene around the globe celebrates his music. It's all so subjective. Also brings up the question: what makes a hit in today's environment? Do you measure it by viral distribution, downloads, by airplay, or just by sales? To be continued...

Thanks for the kind words!

Jeff, reviewers have to listen to at least 60 seconds. Sharmita from SoundOut explains in the comments at Passive Promotion that most reviewers listen for at least 2 minutes. I believe that reflects 60 seconds of listening, and the rest typing while the music continues to play.

John, I have tried uPlaya. I fed it my John Lennon Songwriting Contest winner and it got a 5.0 (aka "keep trying"). Another song from the album (that got nowhere in the previous JLSC) got a 5.5. I'm not saying it's the world's greatest song or anything, but a panel of professionals thought it was better than mediocre, and I trust their judgment over an algorithm.

My guess is that uPlaya looks for repetition to see how the structure is laid out, like how long it takes to get to the first verse, chorus, etc. It's totally automated after all, so it can't judge lyrics. Maybe it's based on the Golden Mean!

Thanks for the post... I think it is an interesting model at least. I hadn't heard much about it beyond knowing that it existed so I appreciate the review. It sounds like it could be improved without significant effort. It also sounds like it could be less expensive.

Thanks again,

Tom Siegel

IndieLeap.com

This is sooooo bogus ... Like so many other internet traps newbies fall into ... all this is designed to do is suck money out of the pockets of wishful thinkers... " three 24-hour "Express Reports" (a $150 value)" geez ... Give me a break!

Get off your ass... get into the world and compete with other serious hopefuls and you'll soon learn the most important lesson of all ... how to self edit what you create in ways to get others excited about what you have to offer.

The internet has given more 'song sharks' more ways to fleece naive newcomers than the terrestrial world ever did. I really get mad about this kind of crap!

The only thing that worries me about this algorithm is that it can only work on set parameters i.e. compares your music to already successful music.

Maybe I'm ignorant (as per usual) but if you fed something new, groundbreaking and amazing into it you would probably get a low score. So by my own twisted logic the lower the score the more original the piece of music.

That may be true for uPlaya, which is entirely automated, but not for SoundOut, which uses real people. If reviewers can recognize groundbreaking genius in 60 seconds, it will be reflected in the scores.

Hi everyone, Sharmita here from SoundOut. Thanks again Brian for the review and post. As Brian said there was a detailed response on his blog to explain a couple of the queries/points raised and some which are covered in our FAQs (http://www.soundout.com/About/FAQs.aspx). I appreciate some may not have come across so clearly just from the report itself, and we are working to improve this as a direct result of Brian's feedback.

Before I paste the response I just want to make it really clear that it is not us personally or just algorithms that indicate a track's "hit" potential in the reports (we mean sales potential) - it is real feedback from real people, and we only use the web and our technology to aggregate the responses and then make some overall sense out of them in an easy to digest format. Ultimately in the report your track is positioned against over 50,000 other tracks that have been through the same review and rating process, and this is what determines its classification: Excellent (rates in top 5%), Very Good (top 15%), Good (top 30%), Average (top 60%), Below Average (bottom 40%).
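The classification boundaries listed above can be sketched as a simple lookup. This is only an illustration: the function name, the "top X%" input, and the choice to treat each boundary as inclusive are assumptions, not SoundOut's actual implementation.

```python
def classify(top_percent: float) -> str:
    """Map a track's rank (it sits in the top X% of rated tracks) to a label.

    Boundaries come from the comment above; treating them as inclusive
    (e.g. exactly 15% counts as Very Good) is an assumption.
    """
    if not 0 <= top_percent <= 100:
        raise ValueError("top_percent must be between 0 and 100")
    if top_percent <= 5:
        return "Excellent"
    if top_percent <= 15:
        return "Very Good"
    if top_percent <= 30:
        return "Good"
    if top_percent <= 60:
        return "Average"
    return "Below Average"


# A track ranked in the top 45% lands in the Average band.
print(classify(45))
```

Under this reading, any track outside the top 60% receives the same label however low it ranks.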

Those 50,000 other tracks are a combination of major label releases (which go through SoundOut every week) and indie artists that use this service and our sister site Slicethepie.

Fontana Distribution (owned by Universal) has partnered with SoundOut as they want us to send them a percentage of top consumer rated tracks/artists on a monthly basis so they can present them to their A&R network at up to 80 labels in the US.

Ok so here's the response from Brian's original blog to save you linking through:

a) They promise to tell you how good your track is with guaranteed 95% accuracy (I’m still trying to wrap my brain around what that means)

We've tested SoundOut over and over again by randomly feeding the same track multiple times to a different (smart) crowd of 80 reviewers each time. The overall track rating you get in the reports falls within a 5% range, making SoundOut 95% accurate. It's not a coincidence – it's just us harnessing Wisdom Of Crowds, a methodology used by Google and loads of other organisations to help accurate decision making. Please see our FAQs for more details about how this works.

The report positions how well your track is received against thousands of other tracks that have been reviewed and rated through SoundOut, and it is this which determines how good your track is in the broader market and within its own genre.

b) None of the songs are deemed worthy of being album tracks, much less singles. In the most important metric, Market Potential, my best song received a 54%, my worst 39%, and my favorite a pathetic 20%. Despite the huge swing in percentages, the track ratings only vary from 4.7 to 5.9, which implies Market Potential scores of 47% to 59%.

See above, SoundOut doesn’t tell you if your tracks are worthy or not for use. It objectively positions your track in the real world/broader market and against other tracks in its own genre. A track that is classified in the report as “album track” is one that rates in the top 30% of the thousands of other tracks that have been reviewed and rated through SoundOut. If it shows up as “single” it rates in the top 15% and “strong single” in the top 5% highest rated tracks. It’s a competitive market out there and not many songs make it as hits.

The Market Potential percentage is calculated using the track rating, the passion level rating for the track, and also takes into account how much the reviewers have talked about the "commerciality" of the track in their reviews (including references to radio friendliness, its hit potential etc.). The track rating indicates how good the track is in terms of quality and the passion rating identifies how much the reviewers liked it. The more the audience loved the track the more likely they would be to buy it.

Since Aug 2009 we have put every new release by the major labels through SoundOut, before the singles have been released (commercially or on radio). By subsequently mapping the SoundOut Market Potential ratings of the tracks against their post-release sales performance, this has shown a definitive correlation between the two and is why we are confident that SoundOut is a good indicator of a track's sales or hit potential. It should be noted however that the relative success of a single assumes a serious promotional budget, and also directly relates to the existing profile of an artist.

c) For better or for worse, those scores are weighted using “computational forensic linguistic technology and other proprietary SoundOut techniques.” Even the track rating score is weighted!

Track ratings are weighted according to each reviewer and their review history which we collect. The more experienced and accurate a reviewer has been in the past the more weight their opinion carries – this is to ensure that we deliver more accurate overall results. Please see our FAQ on “Can I trust the reviews? What motivates the reviewers to leave reviews? Are they compensated?” for more details.
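To see how reviewer weighting can move a score away from the raw average, here is a minimal sketch. The ratings, the weights, and both function names are made up for illustration; SoundOut has not published how it derives reviewer weights.

```python
def raw_mean(ratings):
    """Plain average of the reviewers' 0-10 ratings."""
    return sum(ratings) / len(ratings)


def weighted_mean(ratings, weights):
    """Average in which each rating counts in proportion to its reviewer's weight."""
    if len(ratings) != len(weights):
        raise ValueError("need exactly one weight per rating")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(r * w for r, w in zip(ratings, weights)) / total


# Hypothetical panel of four reviewers; the third has a strong track record
# and therefore carries three times the weight of the others.
ratings = [8, 6, 3, 5]
weights = [1.0, 1.0, 3.0, 1.0]

print(raw_mean(ratings))                          # 5.5
print(round(weighted_mean(ratings, weights), 2))  # 4.67
```

One heavily weighted reviewer pulls the score down by almost a full point here, which is why a raw average alongside the weighted one would make the reports easier to audit.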

d) Curiously, two of the same [similar tracks by well known artist] songs are listed on my 39% and 20% reports, which casts further doubt on the underlying algorithms.

Your tracks that received 39% and 20% fall into the same classification boundary (both range under 40%) – albeit one resonated more than the other. This is why you saw the same similar tracks by well known artists – as these show up according to the same classification boundary as your tracks (not by exact market potential percentage).

e) Still, it would be nice to know what criteria (if any) was used to select those 1,000 tracks [that compares your track within genre].

The 1000 tracks that your track is positioned against are the most recent ones submitted through SoundOut for which the user selected the same genre.

f) The elements [in the Review Analysis] listed change from song to song. The only element that was consistently judged excellent is guitar, which is quite generous considering there’s no guitar in any of my songs.

The Review Analysis changes from song to song as it picks up the elements that are talked about most often in the 80 reviews – which can differ depending on the song. The technology used to “read” the reviews is pretty smart and is modelled on the human language so it can understand free text descriptions about music, emotions, moods, etc. more than just positives and negatives.

We have had the word “Bass” included under “Guitar” in reference to bass guitars - which is why this was picked up in your Review Analysis. We have now amended this as a result of your comments as we appreciate the word “Bass” is used differently in Electronic and Dance tracks than as "Bass Guitar".
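The "Bass" under "Guitar" mix-up is easy to reproduce with a naive keyword table. The sketch below is not SoundOut's technology: the term lists, the sample reviews, and the `count_elements` helper are all hypothetical, and simple word matching stands in for whatever language analysis they actually use.

```python
import re
from collections import Counter

# Hypothetical keyword tables; SoundOut's real term lists are not public.
OLD_TERMS = {"guitar": {"guitar", "bass"}, "drums": {"drums", "beat"}}
NEW_TERMS = {"guitar": {"guitar"}, "bass": {"bass"}, "drums": {"drums", "beat"}}


def count_elements(reviews, terms):
    """Count how many reviews mention each element at least once."""
    counts = Counter()
    for review in reviews:
        words = set(re.findall(r"[a-z]+", review.lower()))
        for element, keywords in terms.items():
            if words & keywords:
                counts[element] += 1
    return counts


# Made-up reviews of an electronic track with no guitar in it.
reviews = [
    "Love the deep bass on this one",
    "The bass and the beat are great",
]

print(count_elements(reviews, OLD_TERMS)["guitar"])  # 2
print(count_elements(reviews, NEW_TERMS)["guitar"])  # 0
```

With the old table, two mentions of synth bass are enough to produce a guitar score for a song that has no guitar.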

g) Reviewers only have to listen to the first 60 seconds of your song…..With that in mind, how useful is a comprehensive analysis of the first 60 seconds?

That’s not entirely correct – reviewers have to listen to at least 60 seconds before they leave their rating and write their review (rather than only). This is to ensure they give a track a chance – we know that most reviewers typically listen to 2 mins to the whole track when reviewing.

h) Reviewers are not matched to songs by genre….Less useful still when the data comes from reviewers who aren’t fluent in the genre.

SoundOut randomly selects the 80 reviewers that review a track on purpose, as in order to create a “smart crowd” and deliver accurate overall results, the group has to be diverse and hold different opinions. Please see our FAQ on “How do you select the reviewers who rate and review my track? Why can't I select who reviews my track?” for more details about this.

i) If you operate in a niche genre, searching for your 1000 true fans, SoundOut may not be a good fit.

The genre preferences of the reviewers for SoundOut do broadly reflect the preference split in the real world – so we have more reviewers who are into Pop, Indie, R&B, Rock etc.

However, for this reason the SoundOut reports include the In Genre Classification where your track is positioned against thousands of other tracks in its own genre. So for a niche track – which will be targeted to a niche market only - it is this rating that will be more relevant to you. So, for example your SoundOut report could show a low Overall Market Potential rating e.g. 25% = Below Average – bottom 40% boundary – but the In Genre Classification might show Very Good – Single potential - meaning it falls in the top 15% of all tracks rated in that genre.

Well hope that helps explain SoundOut to you in more detail. As said please check out the site for details in our FAQs.

All the best,

Sharmita

SoundOut

Since we're not clicking through, I guess I need to copy/paste my response to the response! ;)

Thanks for your detailed response Sharmita!

Believe me, I pored over the FAQ as I wrote the article, and you've shared plenty of new information here. For example, classification boundaries and the fact that the track positioning chart is based on the most recent 1,000 reviewed tracks in the genre are nowhere to be found in the FAQ. I really appreciate the clarifications!

In regard to the 95% accuracy claim, I get the Wisdom of Crowds stuff. What I was "trying to wrap my brain around" was the distinction between accuracy and consistency. If you feed the same track to different groups and the result always falls within a 5% range, you've got consistency - but not necessarily accuracy.

Here's a non-musical analogy: If you get a group of folks together who have never tried sushi, and ask them to taste different items, they may consistently favor the California roll. Sushi fans would of course scoff.

Likewise, if you asked high school kids to review Mozart and Brahms, Mozart would almost certainly come out on top, consistently. But Brahms is better (there, I said it!). So why should we trust pop/rock fans to judge electronic, or hip-hop, or death metal for that matter? They may consistently favor tracks that are easy to digest, but if fans of the genre would prefer different tracks, the results aren't accurate. All the weighting in the world won't change that.
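The difference between consistency and accuracy can be shown with a small simulation. Every number in it is invented for illustration: the 15% share of genre fans, the average ratings of 8 and 4, and the spread of individual ratings are assumptions, not SoundOut data.

```python
import random
import statistics


def panel_mean(rng, size=80, fan_share=0.15):
    """Average 0-10 rating from one randomly drawn panel (all numbers hypothetical)."""
    ratings = []
    for _ in range(size):
        is_fan = rng.random() < fan_share
        center = 8.0 if is_fan else 4.0  # assumed: genre fans rate the track higher
        rating = rng.gauss(center, 1.5)
        ratings.append(min(10.0, max(0.0, rating)))  # clamp to the 0-10 scale
    return statistics.mean(ratings)


rng = random.Random(42)  # fixed seed so the run is repeatable
means = [panel_mean(rng) for _ in range(20)]
spread = max(means) - min(means)

print(f"panel means range from {min(means):.2f} to {max(means):.2f}")
print(f"spread across 20 panels: {spread:.2f}")
```

Under these assumptions the panel averages cluster at roughly 4.6, so repeated runs agree with each other closely. None of them comes near the 8 that fans of the genre would give, which is the sense in which a result can be consistent without being accurate for the audience that matters.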

I applaud the decision to change your analysis tools to differentiate between bass and guitar. That seems like a useful distinction even in mainstream pop/rock. Even substituting bass for guitar in my reports, I don't find that section very useful. The inconsistency from song to song makes me question its accuracy. For example, only one report includes keyboard, which forms the basis of my instrumentation. Considering that the information is derived from only 80 reviews, I'd be surprised if any one element was mentioned more than a handful of times.

What if you let reviewers rate each element explicitly using additional 0-10 sliders? It might actually help focus their listening and improve the quality of their reviews, while encouraging them to listen to more of the song. More importantly, it would create a larger sample size and eliminate CFL errors.

Speaking of listening to more of the song, you're right - technically reviewers listen for longer than 60 seconds. My guess is that the first 60 seconds is spent listening and the rest is spent writing as the music continues to play. That's how I did it anyway. :)

Damn... the music biz is full of sharks....

if anyone is wondering, there is another company for this called AudioKite, which Music Think Tank wrote about here: http://www.musicthinktank.com/blog/what-artists-should-know-about-audiokite.html

seems better than soundout. has anyone used it?

I wrote about AudioKite here:

http://passivepromotion.com/what-artists-should-know-about-audiokite

And am hoping to do a shootout soon with AudioKite, SoundOut (including ReverbNation Crowd Review and TuneCore Track Smarts), Feature.fm, and Music Xray.